Reading data from Synology NAS disks on Ubuntu

0x01 Preface

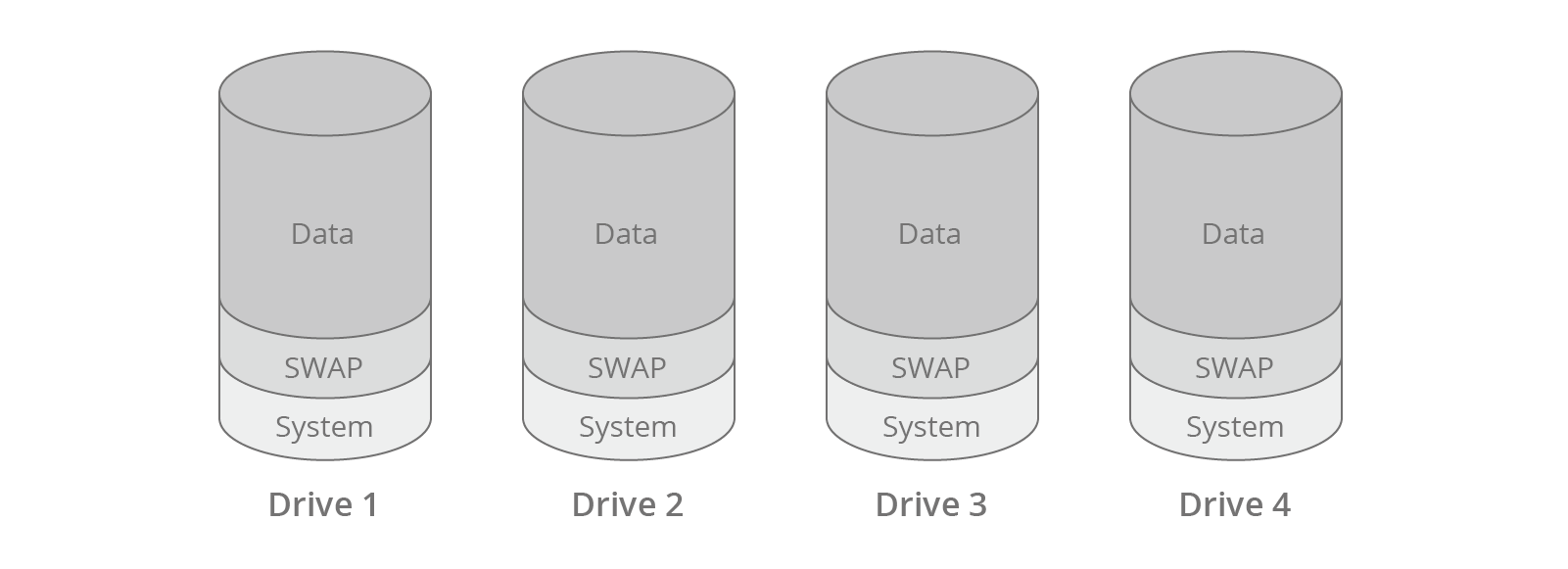

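I have always worried that if the bootloader of my XPEnology box (DSM running on non-Synology hardware) failed, I would not be able to get my data back. So I looked into how Synology stores data, and it turns out to be essentially mdadm + lvm2. Each disk, say sdX, is split into three partitions: the first, sdX1, holds the DSM system; the second, sdX2, is swap; and the third, sdX3, holds user data.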

The DSM and swap partitions are mirrored with RAID1, so a copy exists on every disk; DSM uses the copy on the lowest-numbered disk.

0x02 About Synology disk partitions

A Synology NAS splits every disk into three partitions:

System partition (sdX1): holds the DSM operating system

Swap partition (sdX2): used as swap space

Data partition (sdX3): stores user data

Details: https://kb.synology.cn/zh-cn/DSM/tutorial/What_are_drive_partitions
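To see this layout on a live system without touching the disks, the sketch below classifies Synology RAID member partitions from `lsblk` output. It assumes the md array name that `lsblk` reports in the LABEL column ends in `:0` for the DSM system array, `:1` for swap, and anything else for data, which matches the `SynologyNAS:0`, `SynologyNAS:1` and `SA6400:2` labels in the `lsblk -f` output shown later. The function name `classify_syno_parts` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: classify Synology RAID member partitions by md array name.
# Assumption: names ending in ":0" = DSM system, ":1" = swap, anything else = data.
# Reads `lsblk -rno NAME,FSTYPE,LABEL` output on stdin; only linux_raid_member rows are printed.
classify_syno_parts() {
  awk '$2 == "linux_raid_member" {
    # "SynologyNAS:0" -> parts[1]="SynologyNAS", parts[2]="0"
    n = split($3, parts, ":")
    idx = parts[n]
    if (idx == "0") role = "system"
    else if (idx == "1") role = "swap"
    else role = "data"
    print $1, role
  }'
}

# Usage (read-only):
#   lsblk -rno NAME,FSTYPE,LABEL | classify_syno_parts
```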

0x03 Why use Ubuntu 18.04 Desktop to read Synology disks?

The image you need is ubuntu-18.04-desktop-amd64.iso. In my testing, only Ubuntu 18.04 Desktop worked; 18.04.6 did not, and neither did the 18.04 live-server image.

On other versions, mounting fails with:

    root@ubuntu22:~# mount /dev/vg1/volume_1 /mnt/volume_1
    mount: /mnt/volume_1: wrong fs type, bad option, bad superblock on /dev/mapper/vg1-volume_1, missing codepage or helper program, or other error.

I did, however, find some other approaches online:

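    # Method 1: wipe the superblock (strongly NOT recommended! Even after wiping, the data may still be unreadable, and it can easily cause damage).
    # --zero-superblock overwrites the md RAID superblock on the given device; it is destructive.
    mdadm --zero-superblock /dev/vg2/volume_2

    # Method 2: btrfs only. btrfs restore copies files out of the unmountable volume into the target directory, which should be on a different, writable disk.
    btrfs restore /dev/vg2/volume_2 /mnt/volume_2

0x04 Walkthrough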

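    # Install the required packages
    root@ubuntu20:~# apt-get install -y mdadm lvm2

    # -A (--assemble): assemble and start existing RAID arrays; -s (--scan): take missing information from the config file (/etc/mdadm.conf) or /proc/mdstat to find the arrays automatically. -f (--force) would force assembly, but it is not needed here.
    root@ubuntu20:~# mdadm -As

    # Activate all volume groups and logical volumes
    root@ubuntu20:~# vgchange -ay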
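The three steps above can be wrapped in a small script. This is a sketch, not verified against this exact setup, and it deviates from the commands above in one way: it adds mdadm's `--readonly` option, which per the mdadm man page starts arrays without allowing writes or starting a resync, as a precaution for recovery work. The helpers `run` and `assemble_syno` and the `DRY_RUN` variable are hypothetical; with the default `DRY_RUN=1` the script only prints the commands.

```shell
#!/bin/sh
# Hypothetical wrapper around the install/assemble/activate steps.
# DRY_RUN=1 (default) prints each command; DRY_RUN=0 executes it (must run as root).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

assemble_syno() {
  run apt-get install -y mdadm lvm2
  # Assemble every array found by scanning, but start them read-only.
  run mdadm --assemble --scan --readonly
  # Activate all volume groups so the LVs (e.g. /dev/vg1/volume_1) appear.
  run vgchange -ay
}

assemble_syno
```

To execute for real, run the script as root with `DRY_RUN=0`. Arrays started this way should appear as `(read-only)` rather than `(auto-read-only)` in `/proc/mdstat`, and the file system can then only be mounted read-only.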

    # List the disks
    root@ubuntu20:~# lsblk -f
    NAME FSTYPE LABEL UUID FSAVAIL FSUSE% MOUNTPOINT
    loop0 squashfs 0 100% /snap/bare/5
    loop1 squashfs 0 100% /snap/core20/1828
    loop2 squashfs 0 100% /snap/gnome-3-38-2004/119
    loop3 squashfs 0 100% /snap/gtk-common-themes/1535
    loop4 squashfs 0 100% /snap/snapd/18357
    loop5 squashfs 0 100% /snap/snap-store/638
    loop6 squashfs 0 100% /snap/snapd/25577
    loop7 0 100% /snap/gnome-3-38-2004/143
    sda
    ├─sda1 linux_raid_member SynologyNAS:0 b3f7db81-e608-8f5d-b7ea-171553fb56a2
    │ └─md0 ext4 1.44.1-72806 2337b02b-84c2-4a27-b90f-35ab9db88723
    ├─sda2 linux_raid_member SynologyNAS:1 87297c96-e299-6e97-b225-19835a92d19d
    │ └─md1 swap cdf8ce23-e572-4f18-abfc-fa97a23efb8a
    └─sda3 linux_raid_member SA6400:2 af83396f-9f2e-b8ac-097a-2aa85aeb9c9f
      └─md127 LVM2_member f3db44-lvod-Pwn5-VgV1-rtFl-BoBA-vf0pa8
        ├─vg1-syno_vg_reserved_area
        └─vg1-volume_1 btrfs 2025.11.28-08:10:24 v72806 b3cae3d6-b3ec-4b6c-891b-0e7a7b746232
    sdb
    ├─sdb1 linux_raid_member SynologyNAS:0 b3f7db81-e608-8f5d-b7ea-171553fb56a2
    │ └─md0 ext4 1.44.1-72806 2337b02b-84c2-4a27-b90f-35ab9db88723
    ├─sdb2 linux_raid_member SynologyNAS:1 87297c96-e299-6e97-b225-19835a92d19d
    │ └─md1 swap cdf8ce23-e572-4f18-abfc-fa97a23efb8a
    └─sdb3 linux_raid_member SA6400:2 af83396f-9f2e-b8ac-097a-2aa85aeb9c9f
      └─md127 LVM2_member f3db44-lvod-Pwn5-VgV1-rtFl-BoBA-vf0pa8
        ├─vg1-syno_vg_reserved_area
        └─vg1-volume_1 btrfs 2025.11.28-08:10:24 v72806 b3cae3d6-b3ec-4b6c-891b-0e7a7b746232
    sdc
    ├─sdc1 linux_raid_member SynologyNAS:0 b3f7db81-e608-8f5d-b7ea-171553fb56a2
    │ └─md0 ext4 1.44.1-72806 2337b02b-84c2-4a27-b90f-35ab9db88723
    ├─sdc2 linux_raid_member SynologyNAS:1 87297c96-e299-6e97-b225-19835a92d19d
    │ └─md1 swap cdf8ce23-e572-4f18-abfc-fa97a23efb8a
    └─sdc3 linux_raid_member SA6400:2 af83396f-9f2e-b8ac-097a-2aa85aeb9c9f
      └─md127 LVM2_member f3db44-lvod-Pwn5-VgV1-rtFl-BoBA-vf0pa8
        ├─vg1-syno_vg_reserved_area
        └─vg1-volume_1 btrfs 2025.11.28-08:10:24 v72806 b3cae3d6-b3ec-4b6c-891b-0e7a7b746232
    sdd
    ├─sdd1 vfat 6C8A-FED4 511M 0% /boot/efi
    ├─sdd2
    └─sdd5 ext4 af04c6ef-64b5-426d-a099-63cadb3b4c9f 83G 10% /
    sr0 iso9660 Ubuntu 20.04.6 LTS amd64 2023-03-16-15-57-27-00
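In this output the volume to mount is the device whose FSTYPE is `btrfs` (`vg1-volume_1`), and `lsblk` lists it once under every member disk. A sketch that picks it out, assuming `lsblk -rno NAME,FSTYPE` as input: device-mapper names such as `vg1-volume_1` live under `/dev/mapper/` (the mount error earlier shows `/dev/vg1/volume_1` resolving to `/dev/mapper/vg1-volume_1`), while a btrfs file system placed directly on an md array would be `/dev/mdN`. The function name `find_btrfs_devices` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print each btrfs block device once, as a /dev path.
# Input: `lsblk -rno NAME,FSTYPE` on stdin. lsblk may repeat RAID/LVM children
# under every member disk, so duplicates are dropped via seen[].
find_btrfs_devices() {
  awk '$2 == "btrfs" && !seen[$1]++ {
    # md arrays are /dev/mdN; LVM logical volumes are device-mapper nodes
    if ($1 ~ /^md[0-9]+$/) print "/dev/" $1
    else print "/dev/mapper/" $1
  }'
}

# Usage (read-only):
#   lsblk -rno NAME,FSTYPE | find_btrfs_devices
```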

    # Check the software RAID arrays.
    # md0 is the DSM system partition and md1 is swap. Each is a RAID1 across the same partition on every disk,
    # which keeps the copies in sync and provides redundancy; when the Synology boots, it uses the copy on the lowest-numbered disk.
    root@ubuntu20:~# cat /proc/mdstat
    Personalities : [raid1] [raid6] [raid5] [raid4] [linear] [multipath] [raid0] [raid10]
    md1 : active raid1 sdb2[27] sdc2[28] sda2[26]
          2096128 blocks super 1.2 [26/3] [UUU_______________________]

    md127 : active (auto-read-only) raid5 sdb3[1] sdc3[2] sda3[0]
          1932076416 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]
            resync=PENDING

    md0 : active raid1 sdb1[27] sda1[26] sdc1[28]
          8387584 blocks super 1.2 [26/3] [UUU_______________________]

    unused devices: <none>
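In `/proc/mdstat`, `[26/3]` means the array has 26 member slots of which 3 are active, so the long run of `_` on md0 and md1 here corresponds to slots with no disk attached rather than to failed members (md127, the data array, shows `[3/3]`). A small parser makes this easier to read. It is a sketch that assumes the `/proc/mdstat` layout shown above, and `mdstat_summary` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: one line per md array with RAID level, total slots and active members.
# Input: /proc/mdstat on stdin. "[26/3]" = 26 slots in the array, 3 currently active.
mdstat_summary() {
  awk '
    /^md[0-9]+ :/ {   # header line, e.g. "md1 : active raid1 sdb2[27] ..."
      name = $1; level = ""
      for (i = 3; i <= NF; i++)
        if ($i ~ /^(raid[0-9]+|linear|multipath)$/) { level = $i; break }
      next
    }
    name != "" && match($0, /\[[0-9]+\/[0-9]+\]/) {   # status line containing "[total/active]"
      split(substr($0, RSTART + 1, RLENGTH - 2), c, "/")
      printf "%s %s slots=%s active=%s\n", name, level, c[1], c[2]
      name = ""
    }'
}

# Usage:
#   mdstat_summary < /proc/mdstat
```

For the output above this prints `md1 raid1 slots=26 active=3`, `md127 raid5 slots=3 active=3` and `md0 raid1 slots=26 active=3`.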

    # Show the logical volumes
    root@ubuntu20:~# lvdisplay
      WARNING: PV /dev/md127 in VG vg1 is using an old PV header, modify the VG to update.
      --- Logical volume ---
      LV Path                /dev/vg1/syno_vg_reserved_area
      LV Name                syno_vg_reserved_area
      VG Name                vg1
      LV UUID                lzodJl-D8Td-iXu6-rM3J-l1wX-UPqH-CBEHa0
      LV Write Access        read/write
      LV Creation host, time ,
      LV Status              available
      # open                 0
      LV Size                12.00 MiB
      Current LE             3
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     512
      Block device           253:0

      --- Logical volume ---
      LV Path                /dev/vg1/volume_1
      LV Name                volume_1
      VG Name                vg1
      LV UUID                zSHjna-JGBI-5Mml-1KCn-TiWk-S0jd-GoVpcP
      LV Write Access        read/write
      LV Creation host, time ,
      LV Status              available
      # open                 0
      LV Size                <1.80 TiB
      Current LE             471552
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     512
      Block device           253:1
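Of the two logical volumes, `syno_vg_reserved_area` is only 12 MiB and, judging by its name, is reserved by DSM; `volume_1` holds the data. When a volume group contains several volumes, a sketch like the following lists the candidate LV paths from `lvdisplay` output and skips the reserved area. It assumes the `LV Path` field layout shown above, and `list_data_lvs` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print LV paths from `lvdisplay` output, skipping DSM's
# syno_vg_reserved_area (assumed not to contain user data).
list_data_lvs() {
  awk '$1 == "LV" && $2 == "Path" && $3 !~ /\/syno_vg_reserved_area$/ { print $3 }'
}

# Usage (the WARNING line is written to stderr and discarded here):
#   lvdisplay 2>/dev/null | list_data_lvs
```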

    # Show the status of /dev/vg1/volume_1
    root@ubuntu20:~# lvdisplay /dev/vg1/volume_1
      WARNING: PV /dev/md127 in VG vg1 is using an old PV header, modify the VG to update.
      --- Logical volume ---
      LV Path                /dev/vg1/volume_1
      LV Name                volume_1
      VG Name                vg1
      LV UUID                zSHjna-JGBI-5Mml-1KCn-TiWk-S0jd-GoVpcP
      LV Write Access        read/write
      LV Creation host, time ,
      LV Status              available
      # open                 0
      LV Size                <1.80 TiB
      Current LE             471552
      Segments               1
      Allocation             inherit
      Read ahead sectors     auto
      - currently set to     512
      Block device           253:1

    # Mount the LV
    root@ubuntu20:~# sudo mkdir -p /mnt/volume_1
    root@ubuntu20:~# sudo mount -t btrfs /dev/vg1/volume_1 /mnt/volume_1
    root@ubuntu20:~# cd /mnt/volume_1
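Because md127 is shown as `active (auto-read-only)` with `resync=PENDING`, the first write to it switches the array to read-write and lets the pending resync start, and a read-write mount can write to the volume. When the goal is only to copy data off, a read-only mount is a safer variant of the commands above. This is a sketch under that assumption: `ro` and `nologreplay` are btrfs mount options (`nologreplay` is only accepted together with `ro` and needs kernel 4.5 or later), while `run`, `mount_ro` and `DRY_RUN` are hypothetical names. With the default `DRY_RUN=1` it only prints the commands.

```shell
#!/bin/sh
# Hypothetical read-only variant of the mount step.
# DRY_RUN=1 (default) prints the commands; DRY_RUN=0 runs them (as root).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

mount_ro() {
  dev="$1"   # e.g. /dev/vg1/volume_1
  mnt="$2"   # e.g. /mnt/volume_1
  run mkdir -p "$mnt"
  # ro: mount without write access; nologreplay: skip btrfs log-tree replay (requires ro)
  run mount -t btrfs -o ro,nologreplay "$dev" "$mnt"
}

mount_ro /dev/vg1/volume_1 /mnt/volume_1
```

If the running kernel rejects `nologreplay`, dropping it and keeping `-o ro` is the simpler fallback.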

    # Read the data: it works
    root@ubuntu20:/mnt/volume_1# ls
    @database @eaDir r5_data_dir @S2S @synoconfd @SynoFinder-etc-volume @SynoFinder-log @tmp @userpreference
    root@ubuntu20:/mnt/volume_1# cd r5_data_dir/
    root@ubuntu20:/mnt/volume_1/r5_data_dir# ls
    DiskGenius_Pro_v5.4.3.1342_x64_Chs.exe @eaDir HEU_KMS_Activator_v25.0.0.exe MonitorControl.4.3.3.dmg

Quick mdadm command reference

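    # Show array details
    sudo mdadm --detail /dev/md0

    # Start a consistency check (scrub) of an array. On DSM the data array is usually md2; under Ubuntu it showed up as md127 here.
    sudo mdadm --action=check /dev/md2

    # Remove a faulty disk: mark it as failed first, then remove it (replace /dev/faulty_disk with the actual partition, e.g. /dev/sdX2)
    sudo mdadm --fail /dev/md1 /dev/faulty_disk
    sudo mdadm --remove /dev/md1 /dev/faulty_disk

    # Add a new disk
    sudo mdadm --add /dev/md1 /dev/new_disk

References: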

Synology Knowledge Center, "What are drive partitions?": https://kb.synology.cn/zh-cn/DSM/tutorial/What_are_drive_partitions

Synology NAS disk partitions dissected in depth (Bilibili video): https://www.bilibili.com/video/BV1rSXAYDEJK/
